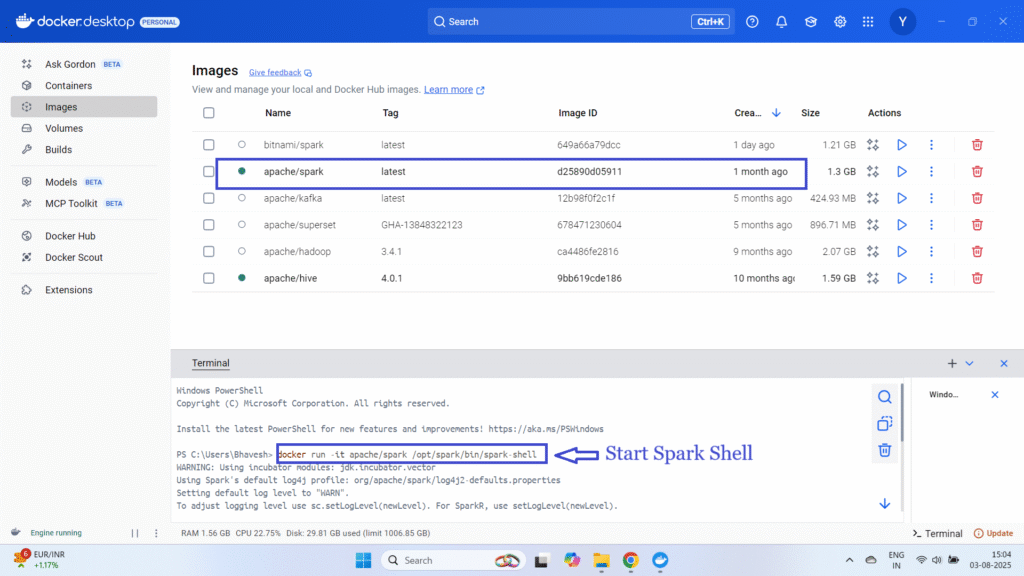

Here is a step-by-step guide for practicing Apache Spark using the official Apache Spark Docker image on Docker Desktop. This approach is cross-platform (it works on Windows, macOS, and Linux), fully containerized, and does not require Spark to be installed on your local system.

1. Prerequisites

Install Docker Desktop: Download and install Docker Desktop from the official Docker website. Ensure it is running on your machine.

(Optional) Docker Compose: Modern Docker Desktop includes Docker Compose by default.

2. Pull the Official Apache Spark Image

Open a terminal or command prompt and run:

docker pull apache/spark:latest

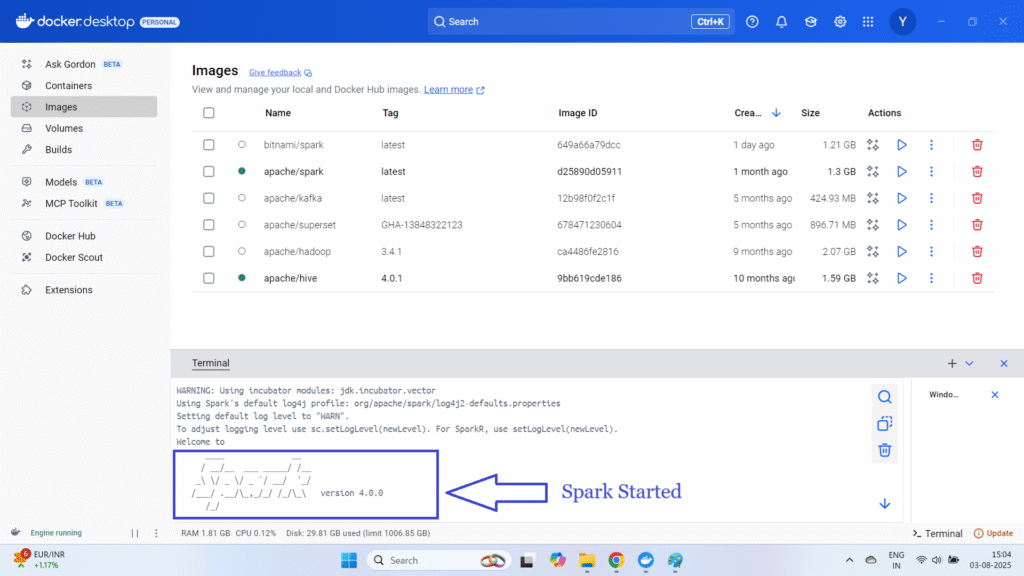

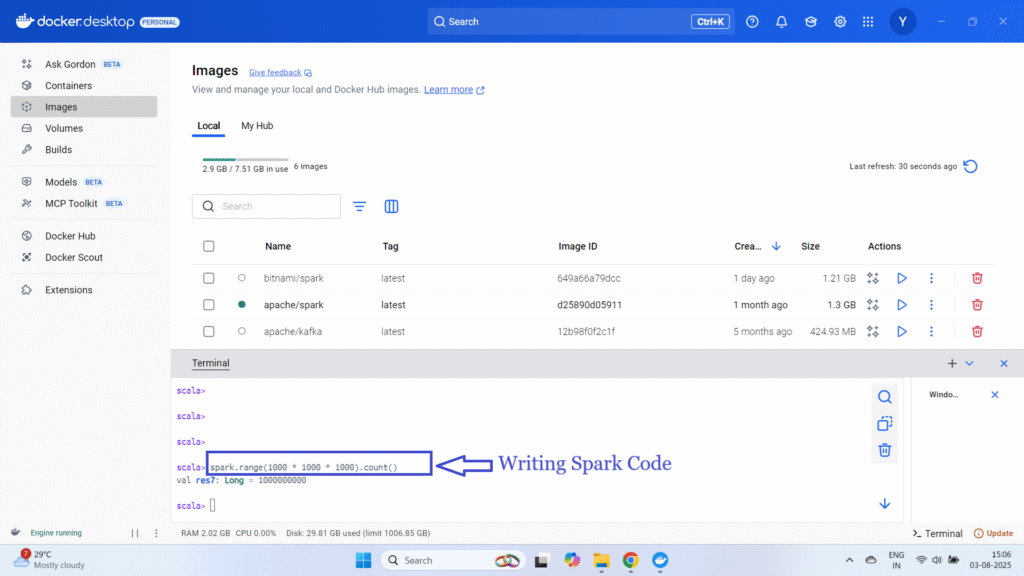

3. Running Spark (Standalone)

Quick Single-Container (Local Mode)

You can launch a basic, single-node Spark container for simple experiments. To start the Scala interactive shell (spark-shell), run:

docker run -it --rm apache/spark:latest /opt/spark/bin/spark-shell

Or for PySpark:

docker run -it --rm apache/spark:latest /opt/spark/bin/pyspark
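
If you launch these shells often, a small helper can assemble the command for you. The following is a minimal sketch, not part of Spark or Docker: the function name `spark_shell_command` and the `SHELLS` mapping are hypothetical names chosen for illustration, while the image tag and the two shell paths are the ones used in the commands above. Note that the cleanup flag is `--rm` with two plain hyphens; a typographic dash pasted from a web page will be rejected by Docker.

```python
import shlex

# Image tag from the pull step in this guide.
SPARK_IMAGE = "apache/spark:latest"

# Hypothetical mapping from a short name to the shell path inside the image.
SHELLS = {
    "scala": "/opt/spark/bin/spark-shell",
    "python": "/opt/spark/bin/pyspark",
}


def spark_shell_command(kind="python", image=SPARK_IMAGE):
    """Return the argv list for an interactive, throwaway Spark shell container."""
    if kind not in SHELLS:
        raise ValueError(f"unknown shell {kind!r}; expected one of {sorted(SHELLS)}")
    # -it keeps the session interactive; --rm removes the container on exit.
    return ["docker", "run", "-it", "--rm", image, SHELLS[kind]]


# Print the command so it can be copied into a terminal.
print(shlex.join(spark_shell_command("python")))
```

To actually start the shell from Python, the returned list can be passed to `subprocess.run(cmd, check=True)` from a real terminal, since `-it` needs an interactive TTY.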